Bridging Bayesian Methods and Deep Learning for Semantic Mapping

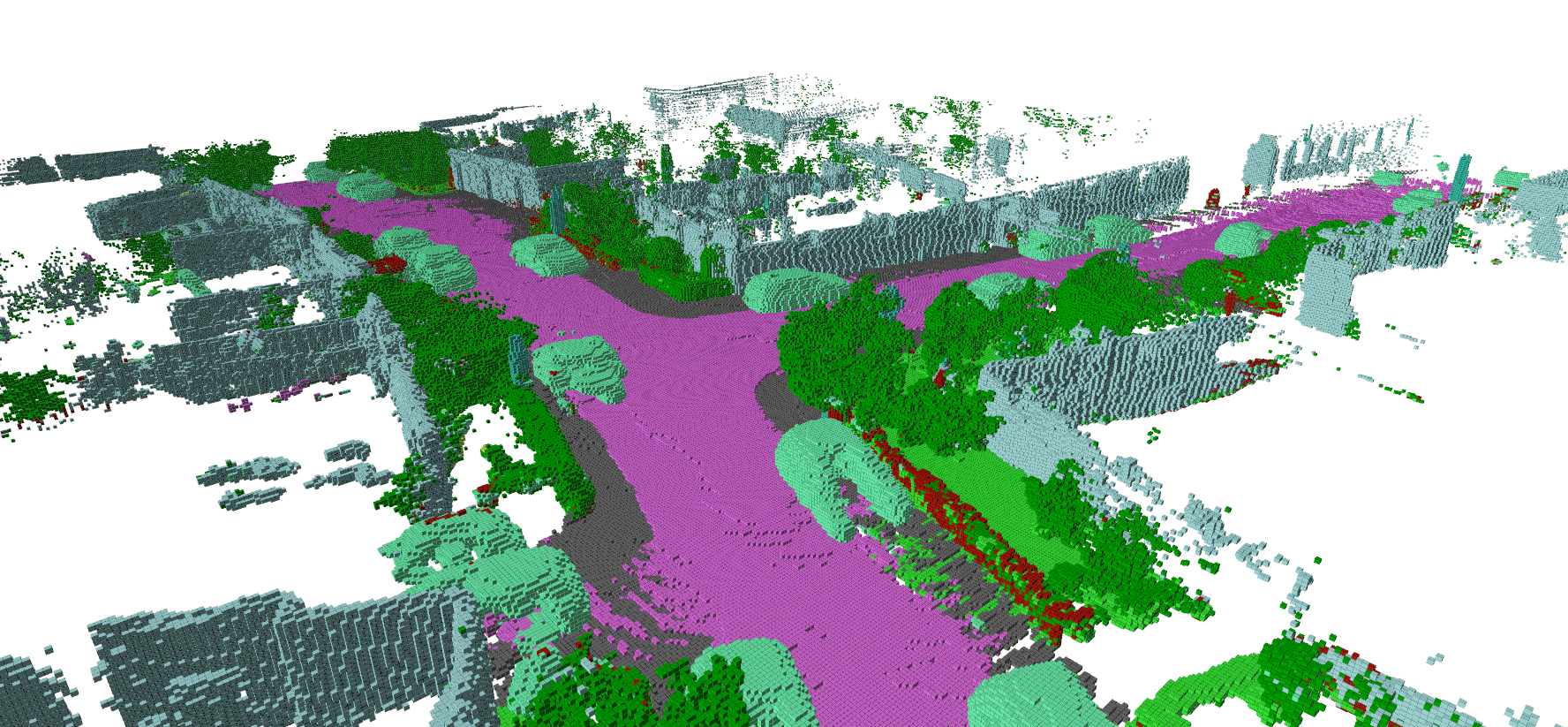

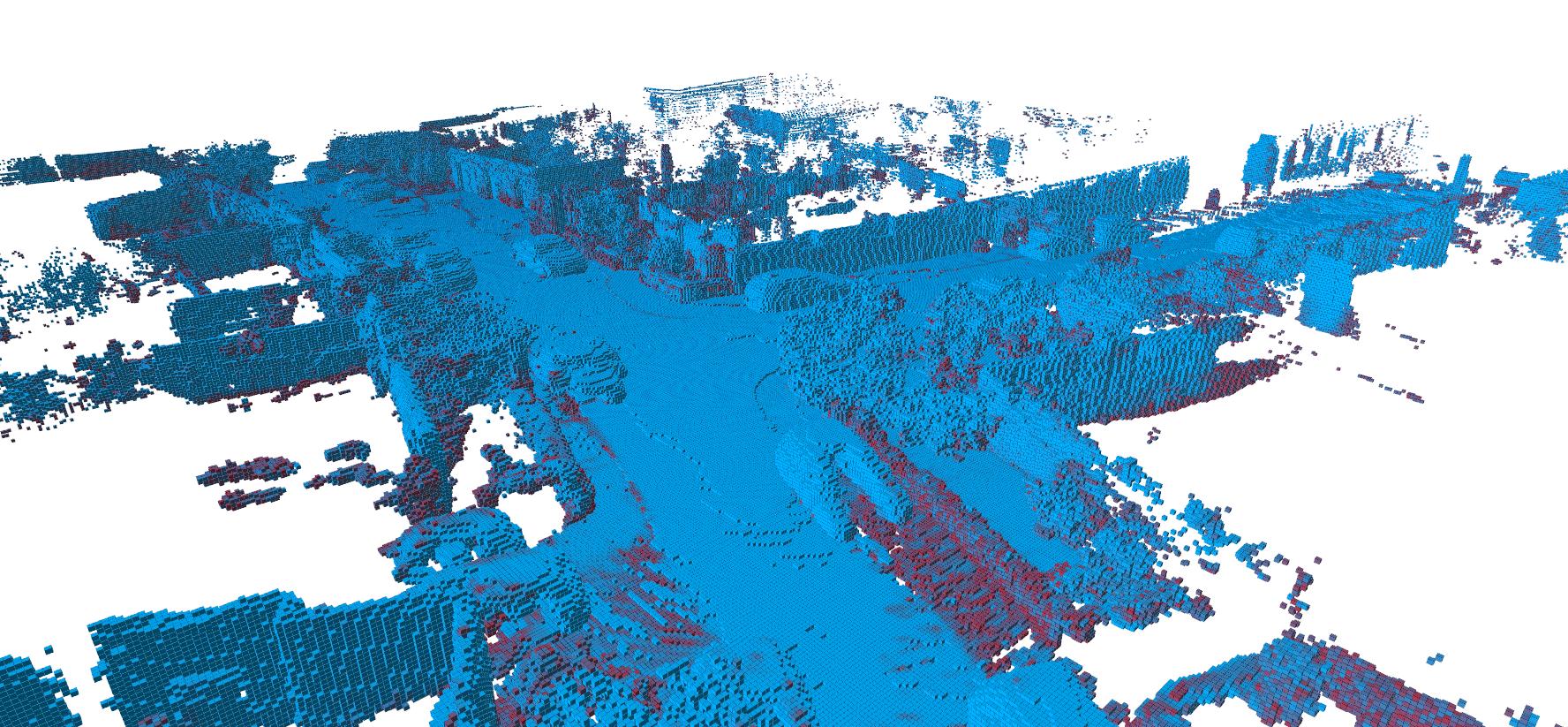

Robotic perception is at a crossroads between classical, hand-crafted methods and modern, implicit architectures. Probabilistic methods offer quantifiable uncertainty and, because their algorithms are robust, transfer well between data sets, but they are generally slow and hand-crafted. In contrast, modern deep learning methods produce more efficient and optimized results but are less reliable when exposed to new data, because they operate in an implicit space. We propose to bridge the gap between Bayesian and deep learning-based mapping with an algorithm called Convolutional Bayesian Kernel Inference (ConvBKI), which targets real-time, robust mapping with quantifiable uncertainty and optimized performance.
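To make "quantifiable uncertainty" concrete, the following is a minimal sketch of a generic Bayesian kernel inference update for a semantic voxel map. It is not the ConvBKI implementation. It assumes each voxel holds Dirichlet concentration parameters over semantic classes, and that each new point adds its predicted class probabilities to nearby voxels, weighted by a compactly supported kernel. The function names (`sparse_kernel`, `bki_update`, `expected_probs_and_variance`), the kernel choice, and all parameter values are illustrative assumptions.

```python
import numpy as np

def sparse_kernel(dist, length_scale=1.0):
    """Assumed compactly supported kernel: 1 at dist = 0, 0 for dist >= length_scale."""
    r = np.clip(dist / length_scale, 0.0, 1.0)
    k = ((2.0 + np.cos(2.0 * np.pi * r)) / 3.0 * (1.0 - r)
         + np.sin(2.0 * np.pi * r) / (2.0 * np.pi))
    # Force exact zeros outside the support to avoid floating-point residue.
    return np.where(r < 1.0, k, 0.0)

def bki_update(alpha, voxel_centers, points, point_probs, length_scale=1.0):
    """Add kernel-weighted class probabilities to each voxel's Dirichlet parameters.

    alpha:         (V, C) concentration parameters per voxel
    voxel_centers: (V, 3) voxel center coordinates
    points:        (N, 3) measured 3D points
    point_probs:   (N, C) per-point class probabilities (e.g. from a network)
    """
    diff = voxel_centers[:, None, :] - points[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)          # (V, N) pairwise distances
    weights = sparse_kernel(dist, length_scale)   # (V, N) kernel weights
    return alpha + weights @ point_probs          # (V, C) posterior parameters

def expected_probs_and_variance(alpha):
    """Mean and per-class variance of the Dirichlet distribution in each voxel."""
    total = alpha.sum(axis=1, keepdims=True)
    mean = alpha / total
    var = mean * (1.0 - mean) / (total + 1.0)
    return mean, var

# Two voxels: one near the measurements, one far away (hypothetical values).
voxel_centers = np.array([[0.0, 0.0, 0.0], [5.0, 0.0, 0.0]])
alpha = np.full((2, 3), 1e-3)                     # weak uniform prior, 3 classes
points = np.array([[0.1, 0.0, 0.0], [0.0, 0.2, 0.0]])
point_probs = np.array([[0.9, 0.05, 0.05], [0.8, 0.1, 0.1]])

alpha = bki_update(alpha, voxel_centers, points, point_probs, length_scale=0.5)
mean, var = expected_probs_and_variance(alpha)
```

In this sketch the voxel near the measurements ends up with a confident class estimate and low variance, while the distant voxel keeps its prior and a high variance. That per-voxel variance is the kind of uncertainty a purely implicit network output does not provide directly.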

Dynamic Semantic Mapping

Whereas most modern methods segment foreground from background, we model all objects within a single map for downstream tasks. Using velocity cues, we embed a higher level of scene understanding in the map, reasoning about how objects move and thereby removing artifacts caused by dynamic objects.